| Distributed Coding of Correlated Sources and Applications in Sensor Networks |

| Distributed Source Quantizer Design |

Research Directions:

|

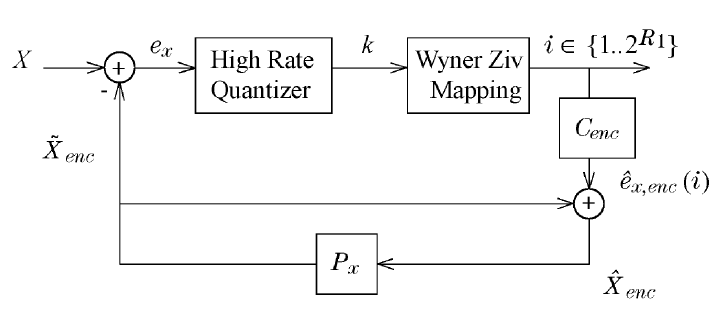

Distributed Predictive Coding:

In real-world settings, the data collected by sensors exhibits both temporal and inter-source correlations, e.g., a network of video cameras monitoring a region. It may appear that the mature field of predictive coding can be combined in a straightforward manner with distributed coding algorithms to exploit both temporal and inter-source correlations. However, such a naive approach yields highly unstable and poor solutions. We identified the inherent "conflicts" between distributed and predictive coding and proposed several low-complexity, low-delay and stable distributed predictive coding schemes, including cases where drift (encoder/decoder mismatch) is inevitable but explicitly controlled. We proposed iterative descent algorithms for the design of distributed predictive coding (DPC) systems. The first approach, "zero-drift" DPC, allows no mismatch between the encoder and decoder prediction error estimates. To exploit inter-source correlation more efficiently, the "controlled-drift" DPC approach relaxes the zero-drift constraint. Simulation results show that both proposed distributed predictive schemes perform significantly better than memoryless distributed coding and traditional single-source predictive coding. Moreover, the controlled-drift DPC scheme offers additional gains over the zero-drift DPC scheme, especially at high inter-source correlation. Related publications: A. Saxena and K. Rose, "Distributed predictive coding for spatio-temporally correlated sources," IEEE Transactions on Signal Processing, vol. 57, pp. 4066-4075, Oct. 2009. A. Saxena and K. Rose, "On distributed quantization in scalable and predictive coding," Sensor, Signal and Information Processing (SENSIP) Workshop, May 2008. |
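The encoder/decoder "drift" discussed above can be illustrated with a minimal sketch. It assumes a toy pair of sources (an AR(1) process `x` and a noisy copy `y` available at the decoder), a uniform scalar quantizer on the prediction residual, and a hypothetical decoder that blends in `y` with a fixed weight `w`. The parameters `a`, `step` and `w` are illustrative assumptions, and this is not the published DPC design algorithm; it only shows why feeding a side-information-refined reconstruction back into the decoder's predictor breaks encoder/decoder agreement.

```python
import numpy as np

def quantize(e, step):
    """Uniform scalar quantizer: returns the reconstructed residual."""
    return step * np.round(e / step)

def correlated_ar1_pair(n, a=0.9, side_noise=0.3, seed=0):
    """Toy data (assumption): AR(1) source x and a correlated source y = x + noise."""
    rng = np.random.default_rng(seed)
    x = np.zeros(n)
    for t in range(1, n):
        x[t] = a * x[t - 1] + rng.standard_normal()
    y = x + side_noise * rng.standard_normal(n)
    return x, y

def predictive_decode(x, side, a=0.9, step=0.5, w=0.5, feed_refined=False):
    """Hypothetical predictive coder for x whose decoder also observes `side`.

    The encoder can only track its own reconstruction. The decoder refines each
    sample by blending in `side` with weight w. If the refined value is fed back
    into the decoder's predictor (feed_refined=True), the encoder and decoder
    predictor states diverge, i.e. drift appears.
    Returns (MSE of the refined output, mean absolute predictor drift).
    """
    enc_state = 0.0  # encoder's copy of the previous reconstruction
    dec_state = 0.0  # decoder's predictor state
    sq_err, drift = [], []
    for t in range(len(x)):
        # Encoder: quantize the prediction residual w.r.t. its own state.
        e_hat = quantize(x[t] - a * enc_state, step)
        enc_state = a * enc_state + e_hat
        # Decoder: same residual, but possibly a different predictor state.
        coarse = a * dec_state + e_hat
        refined = (1 - w) * coarse + w * side[t]
        sq_err.append((refined - x[t]) ** 2)
        dec_state = refined if feed_refined else coarse
        drift.append(abs(dec_state - enc_state))
    return float(np.mean(sq_err)), float(np.mean(drift))
```

With `feed_refined=False` the decoder's predictor follows exactly the same arithmetic as the encoder's, so the reported drift is exactly zero (loosely analogous to the zero-drift idea); with `feed_refined=True` the drift becomes nonzero, which a controlled-drift design would have to manage explicitly.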

|

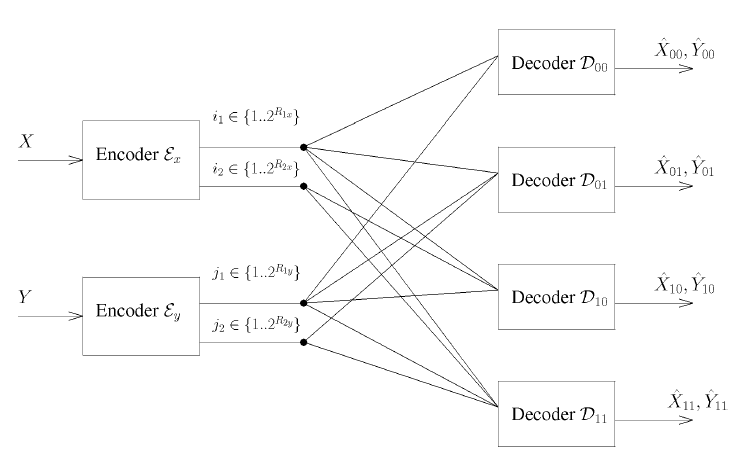

Distributed Scalable Coding:

We considered the problem of distributed coding of correlated sources that are communicated to a central unit, a setting typically encountered in sensor networks. As channel conditions may vary with time, it is often desirable to guarantee a base layer of coarse information during channel fades, as well as robustness to channel link (or sensor) failures. Specifically, we considered two cases of the scalable distributed coding problem: 1. Multi-stage distributed source coding (MS-DSC): Similar to multi-stage coding of a single source, this special case of scalable distributed coding has low codebook storage requirements and needs less training data. However, when multi-stage coding is directly combined with distributed coding, the resulting naive algorithm yields poor rate-distortion performance, due to underlying conflicts between the objectives of the two coding methods. We proposed an appropriate system paradigm for MS-DSC, which allows such tradeoffs to be explicitly controlled. 2. Scalable distributed source coding (S-DSC): Next, we considered the unconstrained scalable distributed coding problem. Although a standard Lloyd-style distributed coder design algorithm is easily generalized to encompass scalable coding, its performance depends heavily on initialization, and it virtually always converges to a poor local minimum. We proposed an effective initialization scheme for such a system, which employs a properly designed MS-DSC system. We also proposed iterative design techniques and derived the necessary conditions for optimality of both multi-stage and unconstrained scalable distributed coding systems. Related publications: A. Saxena and K. Rose, "On scalable distributed coding of correlated sources," IEEE Transactions on Signal Processing, vol. 58, no. 5, pp. 2875-2883, May 2010. A. Saxena and K. Rose, "Scalable distributed source coding," Proc. IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 713-716, April 2009 (Best Student Paper Finalist, top 1%). A. Saxena and K. Rose, "Distributed multi-stage coding of correlated sources," Proc. IEEE Data Compression Conference (DCC), pp. 312-321, March 2008. |
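The multi-stage structure that MS-DSC builds on can be sketched for a single scalar source: stage 1 is a coarse quantizer (the base layer) and stage 2 quantizes the stage-1 residual (the enhancement layer). This minimal sketch assumes plain Lloyd (k-means) design with quantile initialization; the distributed index mapping and the tradeoff controls of the published MS-DSC paradigm are deliberately omitted.

```python
import numpy as np

def nearest(data, codebook):
    """Index of the nearest codeword (squared error) for each sample."""
    return np.argmin((data[:, None] - codebook[None, :]) ** 2, axis=1)

def lloyd_1d(data, k, iters=50):
    """Plain Lloyd (k-means) scalar quantizer design, quantile-initialized."""
    codebook = np.quantile(data, (np.arange(k) + 0.5) / k)
    for _ in range(iters):
        idx = nearest(data, codebook)
        for j in range(k):
            members = data[idx == j]
            if members.size:
                codebook[j] = members.mean()  # centroid condition
    return codebook

def design_two_stage(train, k1, k2):
    """Stage 1 quantizes the source; stage 2 quantizes the stage-1 residual."""
    cb1 = lloyd_1d(train, k1)
    residual = train - cb1[nearest(train, cb1)]
    cb2 = lloyd_1d(residual, k2)
    return cb1, cb2

def two_stage_reconstruct(x, cb1, cb2):
    """Return (base-layer-only, base + enhancement) reconstructions."""
    base = cb1[nearest(x, cb1)]
    enhanced = base + cb2[nearest(x - base, cb2)]
    return base, enhanced
```

With `k1 = k2 = 4`, the decoder stores 4 + 4 = 8 codewords instead of the 16 an unconstrained 4-bit quantizer would need, which is the storage advantage mentioned above; the price is that the second stage cannot adapt its codewords to each stage-1 cell.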

|

Globally optimal design of distributed quantizers by deterministic annealing:

The code design for the encoders at the sensor nodes and the decoder at the gateway node should be done jointly. An iterative technique such as the generalized Lloyd algorithm can be used for the joint design. However, such an algorithm is highly sensitive to initialization and may converge to a poor local minimum on the distortion-cost surface. Here too, a global optimization tool such as deterministic annealing (DA) is needed for the code design. We have developed a DA-based approach for distributed source coding. Furthermore, the system should be robust to failures of sensor nodes or communication channels. We developed and tested an extended DA-based algorithm for distributed source coding that is robust to sensor/channel failures. Related publications: A. Saxena, J. Nayak, and K. Rose, "Robust distributed source coder design by deterministic annealing," IEEE Transactions on Signal Processing, vol. 58, no. 2, pp. 859-868, Feb. 2010. |
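To make the annealing idea concrete, here is a minimal sketch of basic deterministic annealing for a single-source scalar quantizer under squared error. At temperature `t`, each sample is associated with every codeword with a Gibbs probability proportional to `exp(-(x - c_j)^2 / t)`, codewords are updated as probability-weighted centroids, and `t` is lowered geometrically. The cooling factor, iteration counts and the fixed perturbation pattern used to let coincident codewords split are illustrative assumptions; this is not the distributed or failure-robust DA algorithm referenced above.

```python
import numpy as np

def da_quantizer(data, k, t_min=1e-3, alpha=0.9, iters=30, eps=1e-4):
    """Deterministic annealing design of a k-level scalar quantizer (squared error)."""
    offsets = np.linspace(-1.0, 1.0, k)   # fixed perturbation pattern (assumption)
    codebook = np.full(k, data.mean())     # all codewords start at the centroid
    t = 4.0 * data.var()                   # above the first critical temperature, 2*var
    while t > t_min:
        codebook = codebook + eps * offsets  # lets coincident codewords split
        for _ in range(iters):
            d = (data[:, None] - codebook[None, :]) ** 2
            logits = -d / t
            logits -= logits.max(axis=1, keepdims=True)  # numerical stability
            p = np.exp(logits)
            p /= p.sum(axis=1, keepdims=True)            # Gibbs association p(j|x)
            mass = p.sum(axis=0)
            new = (p * data[:, None]).sum(axis=0) / np.maximum(mass, 1e-300)
            codebook = np.where(mass > 0, new, codebook)  # keep codewords with no mass
        t *= alpha                                        # geometric cooling
    return np.sort(codebook)
```

At high temperature all codewords coincide at the data mean; as `t` drops below successive critical temperatures they split, and at low temperature the associations become nearly hard, so the result approaches a Lloyd fixed point reached without depending on a random initial codebook.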

|

Event-based compression for sensor networks:

In a sensor network, the bulk of the data collected by the sensors is typically uninteresting, with occasional spurts of data that correspond to interesting events. It thus makes sense to heavily compress the uninteresting data before transmission, while maintaining higher-fidelity transmission of the event data. Motivated by this event-based paradigm for compression in sensor networks, we formulated the problem of correlated source coding under a cost criterion that is statistically conditioned on event occurrences. We proposed a scheme in which sensors optimally compress and transmit the data to the central unit so as to minimize the expected distortion in segments containing events. We also demonstrated the approach in the setting of entropy-constrained distributed vector quantizer design, using a modified distortion criterion that appropriately accounts for the joint statistics of the events and the observation data. Related publications: J. Singh, A. Saxena, K. Rose and U. Madhow, "Optimization of correlated source coding for event-based compression in sensor networks," Proc. IEEE Data Compression Conference (DCC), pp. 73-82, March 2009. |
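An event-conditioned cost can be sketched as a weighted distortion: if `w_i` is proportional to P(event | x_i), then the `w`-weighted average of the squared error estimates E[(X - Q(X))^2 | event], and the Lloyd centroid step becomes a weighted centroid. This minimal sketch assumes a single scalar source and a hypothetical, known event probability; the published work addresses entropy-constrained distributed vector quantizers, which this does not attempt.

```python
import numpy as np

def nearest(x, codebook):
    """Index of the nearest codeword (squared error) for each sample."""
    return np.argmin((x[:, None] - codebook[None, :]) ** 2, axis=1)

def weighted_lloyd(x, w, k, iters=100):
    """Lloyd design minimizing sum_i w_i * (x_i - c_{q(x_i)})^2."""
    codebook = np.quantile(x, (np.arange(k) + 0.5) / k)
    for _ in range(iters):
        idx = nearest(x, codebook)
        for j in range(k):
            sel = idx == j
            if w[sel].sum() > 0:
                codebook[j] = np.average(x[sel], weights=w[sel])  # weighted centroid
    return codebook

def event_conditioned_mse(x, w, codebook):
    """Estimate of E[(X - Q(X))^2 | event] when w_i is proportional to P(event | x_i)."""
    return np.average((x - codebook[nearest(x, codebook)]) ** 2, weights=w)

# Hypothetical example: events are likely when |x| is large.
rng = np.random.default_rng(0)
x = rng.standard_normal(20000)
w = np.where(np.abs(x) > 1.5, 1.0, 0.05)        # assumed P(event | x), up to scale
plain = weighted_lloyd(x, np.ones_like(x), 4)   # ordinary MSE design
event = weighted_lloyd(x, w, 4)                 # event-conditioned design
```

The event-conditioned design moves codewords toward the regions where events are likely, trading accuracy on the uninteresting samples for lower distortion on the event data.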

|

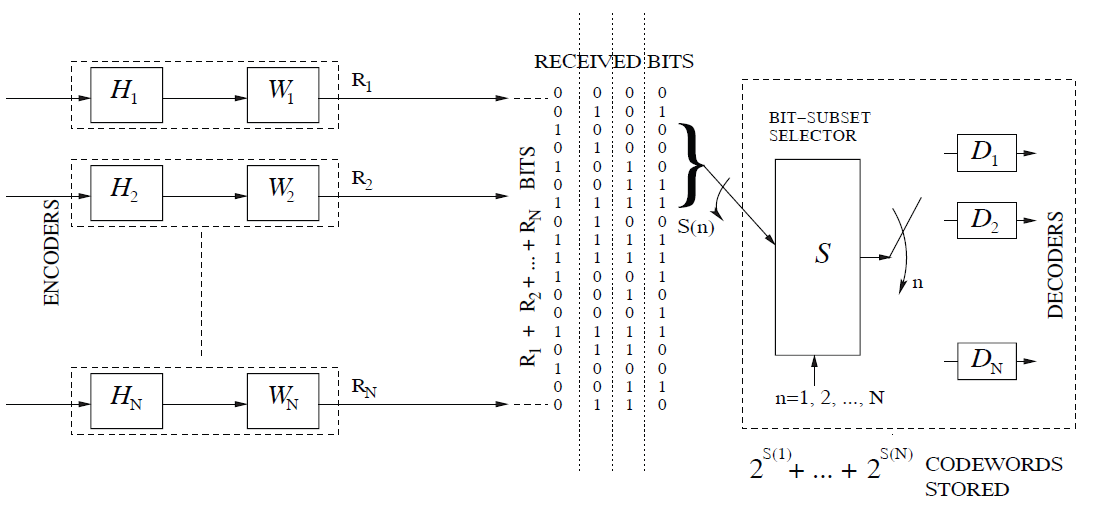

Large-scale distributed coding:

We are interested in the problem of scaling distributed coding to a large number of sources. A typical feature of traditional distributed system design is the exponential growth of the decoder codebook size with the number of sources in the network. This growth in complexity renders traditional approaches to distributed coder design impractical for even moderately sized sensor networks. To illustrate, consider 20 sensors, each transmitting at 2 bits. The base station receives 40 bits and has to estimate all the sources. This implies that the decoder has to maintain a codebook of size $20 \times 2^{40}$, which requires about 175 terabytes of memory! In general, for $N$ sources each transmitting at $R$ bits, the total decoder codebook size is $N \times 2^{NR}$. The need for such large codebooks makes the design of optimal low-storage distributed coders a problem of vast practical significance. Inspired by recent results on fusion coder design, we proposed a new approach to scale distributed coders to a very large number of sources. Central to our approach is a bit-subset selector module, which determines the subset of received bits to be used for decoding each source. In this manner, we explicitly trade decoder codebook size against distortion. Results on both synthetic and real-world sensor network datasets show considerable gains over naive source-grouping methods. Related publications: S. Ramaswamy, K. Viswanatha, A. Saxena and K. Rose, "Towards large scale distributed coding," Proc. IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), March 2010. |
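The codebook arithmetic above can be checked directly. The sketch assumes 8-byte (double-precision) reconstruction values, which is consistent with the quoted figure of about 175 terabytes. The bit-subset helper and the choice of 8 bits per source are hypothetical, used only to show how restricting each source's decoder to a subset of the received bits removes the exponential dependence on the number of sources.

```python
BYTES_PER_ENTRY = 8  # assumption: double-precision reconstruction values

def full_decoder_entries(n_sources, rate_bits):
    """One reconstruction per source for each of the 2**(N*R) received bit-tuples."""
    return n_sources * 2 ** (n_sources * rate_bits)

def subset_decoder_entries(n_sources, subset_bits):
    """Hypothetical bit-subset decoding: each source is decoded from only
    `subset_bits` of the received bits, so its table has 2**subset_bits entries."""
    return n_sources * 2 ** subset_bits

full = full_decoder_entries(20, 2)        # 20 * 2**40 entries
print(full, full * BYTES_PER_ENTRY / 1e12)   # ≈ 175.9 terabytes
small = subset_decoder_entries(20, 8)     # 20 * 2**8 = 5120 entries
print(small, small * BYTES_PER_ENTRY)        # 40960 bytes
```

The full table grows as $N \times 2^{NR}$, whereas with a fixed subset size $s$ per source it grows only as $N \times 2^{s}$; the design problem is then choosing which bits each source's decoder uses so that the loss in distortion stays small.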

|

Error/erasure-resilient distributed coding:

A major obstacle to the practical deployment of distributed source codes in real-world sensor networks is the critical requirement for error resilience, given the severe channel conditions in many wireless sensor networks. Unfortunately, codes designed for a noiseless framework provide extremely poor error resilience and cannot be deployed directly in a noisy setting. Motivated by this challenge, we proposed a unified approach for large-scale, error-resilient distributed source coding. The design of such error-resilient DSC is a very challenging problem, as the objectives of DSC and channel coding conflict: one tries to eliminate dependencies, while the other tries to correct errors using the dependencies. At one extreme, the system could be made compression-centric and designed to exploit inter-source correlations as in the noiseless framework. However, this reduces the dependencies among the transmitted bits, leading to poor error resilience at the decoder and eventually poor reconstruction distortion. At the other extreme, the encoders could be designed to preserve all the correlations among the transmitted bits, which could be exploited at the decoder to achieve good error resilience. However, such a design fails to exploit the gains of distributed compression, leading to poor overall rate-distortion performance.

major obstacle which poses an existential threat on practical

deployment of distributed source codes in real world sensor networks is

the critical requirement for error-resilience given the severe channel

conditions in many wireless sensor networks. Unfortunately, the codes

designed for a noise-less framework provide extremely poor error

resilience and cannot be deployed directly in a noisy setting.

Motivated by this challenge, we proposed a unified approach for large

scale, error-resilient distributed source coding. The design of such

error-resilient DSC is a very challenging problem, as the objectives of

DSC and channel coding are counter-active in the sense that one tries

to eliminate dependencies, while the other tries to correct errors

using the dependencies. On the one hand, the system could be made

compression centric and designed to exploit inter-source correlations

analogous to the noiseless framework. However, this reduces the

dependencies among the transmitted bits leading to poor

error-resilience at the decoder and eventually poor reconstruction

distortions. On the other extreme, the encoders could be designed to

preserve all the correlations among the transmitted bits which could be

exploited at the decoder to achieve good error resilience. However,

such a design fails to exploit the gains due to distributed compression

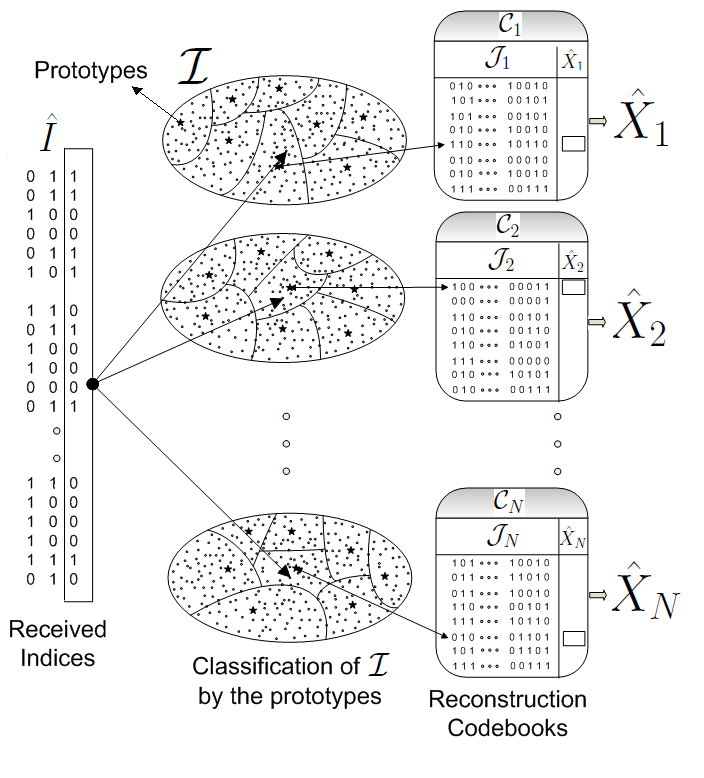

leading to poor over all rate-distortion performance. We proposed a new decoding approach, based on an optimally designed classifier-based framework, which builds in inherent error-resilience into the system and provides explicit control over decoding complexity. Essentially, to decode each source, we partition the space of received bit-tuples using a nearest neighbor quantizer at a decoding rate consistent with the allowed complexity and each partition is then mapped to a reconstruction value for that source. We also proposed a deterministic annealing (DA) based global optimization algorithm for the design due to the highly non-convex nature of the cost function, which further improves the performance over basic greedy iterative descent technique. Simulation results on various synthetic and real sensor network datasets demonstrate significant gains of the proposed technique over other state-of-the art approaches with the gains monotonically increasing with the network size. Related publications: K. Viswanatha, S. Ramaswamy, A. Saxena and K. Rose, ``A

classifier based decoding approach for large scale distributed coding",

Proc. IEEE International Conference on Acoustics Speech and Signal

Processing (ICASSP), May 2011. |
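The decoding step described above (a nearest-neighbor partition of received bit-tuples, with one reconstruction value per cell) can be sketched as follows. The sketch assumes Hamming distance between bit-tuples and a hand-picked set of prototypes and reconstruction values; the published method designs these optimally (including by DA), and its exact distance measure may differ. The point is that a received tuple with a flipped bit still falls into a nearby cell, and the decoder stores K values rather than one per possible tuple.

```python
import numpy as np

def classifier_decode(received_bits, prototypes, reconstructions):
    """Map a received bit-tuple to one source's reconstruction.

    prototypes: (K, M) array of 0/1 prototype bit-tuples; each defines one cell
    of a nearest-neighbor partition of {0,1}^M (Hamming distance assumed here).
    reconstructions: (K,) reconstruction value for this source in each cell.
    """
    received_bits = np.asarray(received_bits)
    dists = np.count_nonzero(prototypes != received_bits, axis=1)  # Hamming distances
    return reconstructions[np.argmin(dists)]

# Hypothetical 6-bit example with K = 4 cells (4 stored values instead of 2**6 = 64).
prototypes = np.array([[0, 0, 0, 0, 0, 0],
                       [1, 1, 1, 0, 0, 0],
                       [0, 0, 0, 1, 1, 1],
                       [1, 1, 1, 1, 1, 1]])
reconstructions = np.array([-1.5, -0.5, 0.5, 1.5])
```

For instance, receiving `[1, 1, 0, 0, 0, 0]` (one bit of `[1, 1, 1, 0, 0, 0]` flipped) is at Hamming distance 1 from the second prototype and at least 2 from the others, so it still decodes to `-0.5`.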
